Project R730 - Part 1

Warning: this post contains copious amounts of impenetrable technobabble; read at your own risk.

It’s been a while since I’ve started a project, so of course that means it’s time. As it stands, my R720 is getting a bit long in the tooth and R730s are now the same price I paid for that system. So to Lab Gopher I went in search of a great deal. For anyone who is looking for a server, that is seriously the best thing I’ve found for sorting through all the eBay cruft. As I type this, there’s an R730 with 256GB of RAM for $420 shipped, which is so ridiculous I’m tempted to buy it as a second server.

The Adventure Begins

Initially the search was a bit slow, because I was set on getting the most CPU cores and RAM for my buck. After a bit of research, it turns out that E5 Xeons have a “sweet spot” for performance at 14 rather than 18 cores. It’s also pretty rare to find a system with the exact specifications you want, so I resigned myself to a likely CPU swap when the time came.

After relaxing my constraints that way, I ran across an R730 with 512GB of RAM at auction and the winning bid was laughably low. So I put in my maximum and wandered away for a while. When all was said and done, I ended up winning the system for $690. The thing that kills me is that if I’d waited a couple days, I could have spent $250 less and gotten a system with half the RAM. Sure, having 512GB of RAM is nice, but I would have quickly compromised for that kind of discount. Still, I got a great deal, and if I really care enough, I can sell half of my RAM and call it a wash.

Hypervisors and You

The main system accounted for, I needed an attack plan. I’ve been running Proxmox VE as my hypervisor ever since I started playing with clusters on my R710. But the way it manages LXC should be illegal, and the upgrade process is straight-up painful. The upgrade wiki from version 6 to 7 is outright terrifying, and I’ve already been through that experience once. Since I’d be migrating anyway, why not consider another platform too?

Everyone knows I’m a huge fan of ZFS. TrueNAS SCALE is probably the most notable available hypervisor platform that supports and directly integrates it. As an added bonus, it provides a whole K3s Kubernetes cluster for the Docker application environment. I would no longer have to manually create a VM for NextCloud, Pi-hole, or any of the other things I use regularly. And because it’s a NAS, the same goes for Samba, NFS, or any other file-sharing activity. Integrated cloud backup? Automated ZFS snapshots and syncs? All there, so no more need for sanoid and the like.

Somewhat ironically, I created a VM with Proxmox to test TrueNAS prior to committing. After fighting with it for a few hours, I had a working NextCloud environment. Then I added a draw.io instance for fun, and Traefik so I could go to cloud.bonesmoses.local or draw.bonesmoses.local without having to supply a separate HTTP port. All of that is just scratching the surface of TrueNAS, so I decided it was at least worth a shot.

On Sorting Storage

That left sorting out storage. I’ve been subsisting on recycled server-class 1TB 2.5-inch HDDs ever since my R710. My R720 currently has 6 of them set up in a RAID1+0, and while mostly serviceable, I figured my storage situation was also in need of an upgrade. Back to eBay and I stumbled across some 1.92TB Samsung PM863 drives for $70 each. Priced per TB, that’s basically what I paid for the spinning rust drives! But how many?

ZFS has several modes of operation for storage pools. My current setup is a simple striped mirror. SSDs, even the cheap ones, are expensive enough and have sufficient performance that such a configuration isn’t optimal. So my other options are RAIDZ1, an analog for RAID-5, and RAIDZ2, an analog for RAID-6. One issue with ZFS is that it doesn’t make resizing a pool easy, so if I wanted to expand my pool without making certain concessions it would be necessary to add just as many disks as I used in the initial pool.

My two options then become two 4-disk RAIDZ1 vdevs, or a single 8-disk RAIDZ2 vdev. Both would give me about 11-12 TB usable space and both could survive two disk failures, but the latter can survive any two disk failures, while the former only survives if the failures land in different vdevs. On the other hand, striped vdevs are faster, easier to maintain, and I can expand the pool with four more drives in a third vdev rather than eight. With that in mind, I decided to use two 4-disk RAIDZ1 vdevs and if I end up regretting it, so be it.
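For anyone who wants to check that comparison, here is a rough sketch of the arithmetic. It assumes the 1.92TB drives mentioned earlier, ignores ZFS metadata and padding overhead, and the function names are made up for this illustration.

```python
from itertools import combinations

DISK_TB = 1.92  # per-drive capacity of the PM863 drives from the post


def usable_tb(vdevs, disk_tb=DISK_TB):
    """Raw usable space for a pool described as (disks, parity) per vdev.

    Ignores ZFS metadata and padding overhead, so real numbers are lower.
    """
    return sum((disks - parity) * disk_tb for disks, parity in vdevs)


def surviving_fraction(vdevs, failures=2):
    """Fraction of all `failures`-disk failure combinations the pool survives."""
    # Tag every disk in the pool with the index of the vdev it belongs to.
    disk_to_vdev = [v for v, (disks, _) in enumerate(vdevs) for _ in range(disks)]
    combos = list(combinations(range(len(disk_to_vdev)), failures))
    survived = 0
    for combo in combos:
        lost = [0] * len(vdevs)
        for disk in combo:
            lost[disk_to_vdev[disk]] += 1
        # The pool survives only if no vdev loses more disks than its parity.
        if all(lost[v] <= vdevs[v][1] for v in range(len(vdevs))):
            survived += 1
    return survived / len(combos)


two_raidz1 = [(4, 1), (4, 1)]  # two striped 4-disk RAIDZ1 vdevs
one_raidz2 = [(8, 2)]          # a single 8-disk RAIDZ2 vdev

for name, layout in (("2x RAIDZ1", two_raidz1), ("1x RAIDZ2", one_raidz2)):
    print(name, round(usable_tb(layout), 2), "TB,",
          f"{surviving_fraction(layout):.0%} of two-disk failures survived")
```

Both layouts come out to the same raw capacity; the difference is entirely in which two-disk failures are survivable.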

Boot to the Head

That left one final essential: a boot device. R730 servers can basically boot from an SD card mirror, a USB device, or one of the hot-swappable drive bays. This isn’t 2005 anymore, so I wanted to avoid the SD card option, and I want to reserve my front bays for storage expansion if necessary. USB isn’t really viable either, so what’s left? It’s possible to buy PCI-E adapters for M.2 SSDs these days, meaning I could get one of those, slap two cheap/small M.2 SSDs on it, and have a mirrored boot device.

While most of these adapter cards offer two M.2 slots, maddeningly they’re not paired properly. M.2 SSD devices come in both NVMe and SATA formats, and for some reason, nearly all of these adapter cards offer one of each, rather than two of the same kind. Why? What is the point of that? After a lot of searching, I found a 4-slot adapter that had an on-board SATA controller, and I settled for that. Looking back, I could have just bought two of those split cards and then had enough slots for all of my planned devices, but I wasn’t thinking that far ahead.

SLOG Through It

I say that because of the non-essential item on my list: a ZFS Log device. ZFS has its own version of a write-ahead data journal, the ZFS Intent Log (ZIL). Every synchronous write must be recorded there as part of a full transaction before being committed to the pool, in case of power loss or a crash. If you don’t have a dedicated device for this, it does that on the underlying pool devices. Since these are SSDs, they have a limited number of write cycles, so you want to reduce that as much as possible. I also frequently test database software, and this kind of usage can result in higher amounts of fragmentation since it can’t buffer grouped writes as well. That, of course, is also bad for SSDs due to underlying write amplification.

The Samsung PM863 SSDs I bought are rated for a truly stupendous 2,800 TB lifetime write limit, which is something like two full orders of magnitude higher than most consumer drives. After they arrived from the supplier, I checked their write health, and was shocked to see them at their full 100% remaining capacity, so they’re essentially brand new. Despite all of this, I still want to avoid thrashing them if possible, so that meant adding a ZFS SLOG, preferably as a mirror. That meant another adapter board, and if I thought finding a SATA adapter was hard, NVMe boards were even more difficult to track down because they tend to demand the full x4 PCI-E bandwidth.
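To put that rating in perspective, here is a quick back-of-the-envelope sketch. The 2,800 TB and 1.92TB figures come from above; the daily write volume is purely a hypothetical number picked for illustration, not something I measured.

```python
RATED_WRITES_TB = 2800  # lifetime write rating quoted in the post
CAPACITY_TB = 1.92      # per-drive capacity quoted in the post

# Hypothetical workload, chosen only to make the arithmetic concrete.
ASSUMED_DAILY_WRITES_TB = 1.0


def full_drive_writes(rated_tb, capacity_tb):
    """How many times the whole drive can be overwritten within its rating."""
    return rated_tb / capacity_tb


def years_of_endurance(rated_tb, writes_per_day_tb):
    """Years until the rating is exhausted at a constant daily write volume."""
    return rated_tb / (writes_per_day_tb * 365)


print(round(full_drive_writes(RATED_WRITES_TB, CAPACITY_TB)), "full drive writes")
print(round(years_of_endurance(RATED_WRITES_TB, ASSUMED_DAILY_WRITES_TB), 1),
      "years at the assumed write rate")
```

Swap in a real daily write figure from the drive's SMART data to get a meaningful estimate.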

I found yet another 4-slot adapter specifically for NVMe cards, but two different vendors canceled the order due to supplier issues, and I gave up. I bought two single NVMe adapters instead. An R730 has eight PCI-E slots, so I have plenty of expansion room, and these lesser adapters are small enough to fit into the half-height bays I’m not using for anything else anyway. That was when I realized I should have just bought two of the more common adapters; they’re cheaper, far more plentiful, and I know they work since I have one in my R720.

Link to the Past

After the flurry of ordering was over, various components started arriving. The first of these was the R730 itself, which I hurled into the basement, then haphazardly plugged the iDRAC into my switch. After assigning it an IP address, I went upstairs to finish the setup. It was about then that I discovered the BIOS was still on version 1.6.4, and promptly did a spit-take. No way. Impossible. That was one of the first BIOS versions for the R730 after it was released. They must have bought it the day it was released, installed it, and never touched it again until the day they sold it to me.

I’d been discussing my plans on the Homelab Discord, and one of the members there made a joke that finding an R730 which wasn’t compatible with v4 Xeon chips was akin to winning the lottery. When did they add that functionality? BIOS version 2.0.1, naturally. The rest of the firmware was equally ancient. This is the risk of buying from an auction rather than one of those tech recyclers that make sure all BIOS and firmware is already up to date. They charge a bit more, but it’s a huge time savings.

Time savings, you ask? Well, all Dell BIOS and firmware updates are cryptographically signed with a private key from Dell. These keys often have expiration dates and are thus updated with some frequency. Since my BIOS was from 2015, I could not install the last update from 2022, or 2020, or… you get the picture. It took me nearly two full days to update all of the firmware and BIOS on that system, because I never skipped more than two years at a time. That included the iDRAC, storage controller, SAS+SATA backplane, integrated 1GbE/10GbE NIC, and even the freaking power supplies.

Inefficiencies

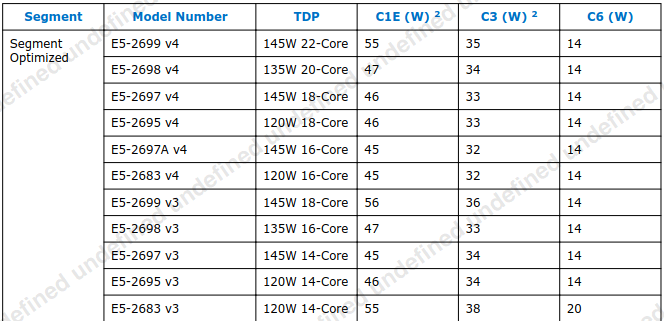

And I needed to do this because (drum roll please) the server came with two E5-2683v3 CPUs. A bit more research and I stumbled across a whitepaper on the E5-2600 processor family. Take a look at the E5-2683v3 vs the E5-2699v3 from the same generation. The 2683 has four fewer cores, uses nearly as much power (55W) when at half idle, and 6W more (20W vs 14W) at full idle. In fact, it’s one of the only chips from the v3 line that uses 20W in C6 state, which is just weird. Basically the CPUs I have are 14-core, which is good, but are about as power efficient as an arc welder compared to other chips from the same family.

Also doubles as a space heater

Rectifying that meant upgrading the CPUs. Prior to receiving the R730, I’d already found several benchmarks that suggested either an E5-2680v4 or E5-2690v4 was my best bet. They both have 14 cores and are both majorly discounted compared to their higher core-count brethren. Either offers roughly the same overall performance as the higher core-count versions, but the loss of cores is a somewhat relevant concern. If I ever need to run benchmarks where processor-pinning is required, that’s sixteen fewer threads available for such assignment.

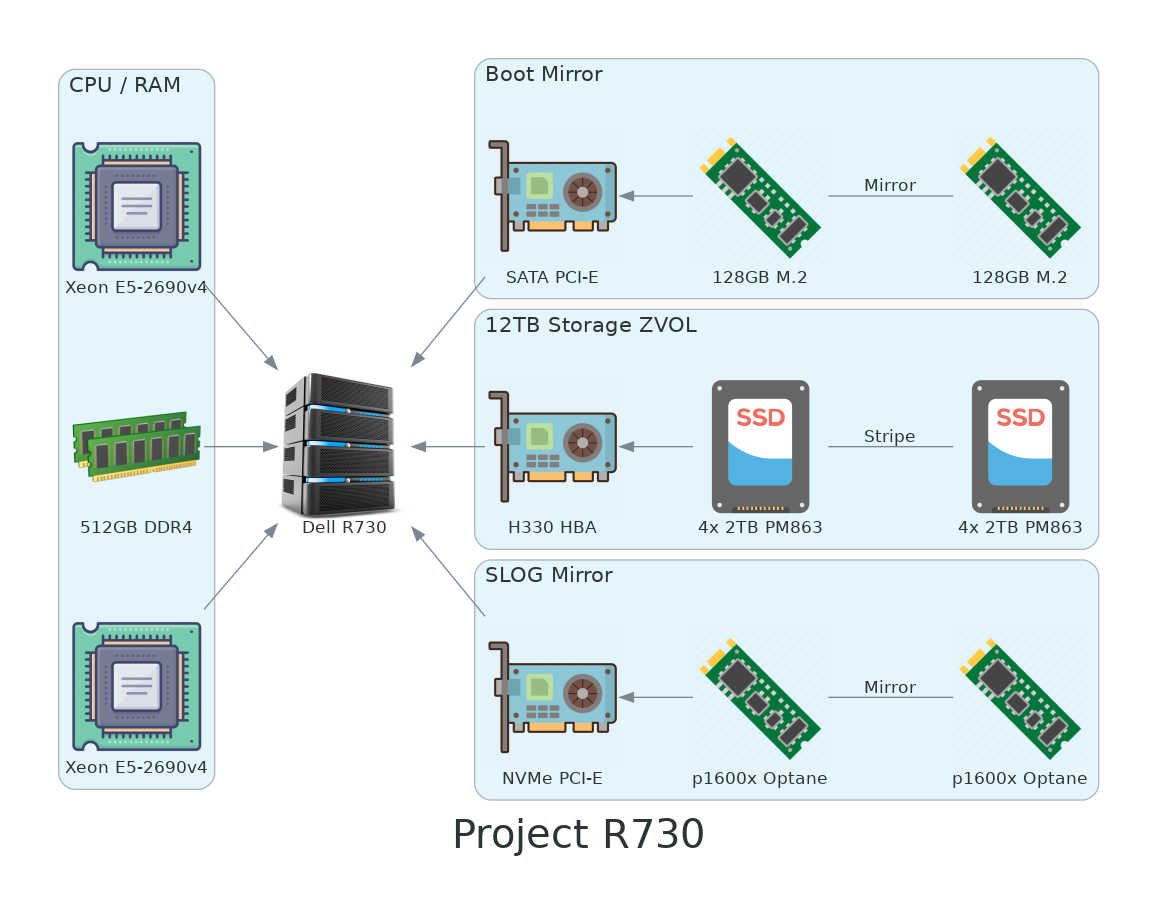

One order for two used E5-2690v4 chips and some Arctic MX-4 thermal paste, and I was done. These should arrive shortly after the PCI-E adapters, so hopefully my trips to the basement will soon be a thing of the past.

Pernicious Planning

Well… almost done. A project at work requires some diagrams, and I’ve been using draw.io for almost everything. It’s fine for the occasional one-off, but this project requires roughly 24-30 diagrams if I include all of the possible variants. I needed automation. After some internet sleuthing, I found the Python Diagrams library.

I decided to use it to create a diagram of my new R730 in an effort to learn how to best use the library. I spent basically all of this Saturday fighting with it because the layout was almost always terrible. Alignment was always crooked for some reason, or nodes were scattered all over the place, or some other problem popped up. The core of the issue is that the software can really only generate diagrams in one direction, so positioning an element above an existing group is almost impossible if you’re in left-to-right mode.

Rather than fighting it once I realized this subtlety, I decided to work within that limitation and about 20 minutes later, I came up with this:

I love it when a plan comes together

There’s also another rule that greatly simplifies things. Any edge between two nodes automatically locks them in a horizontal plane unless you specify the constraint attribute. So if two nodes are linked and the engine can’t find an easy way to orient them horizontally, it’ll create a weird stair-step pattern, or swap node positions, or worse. So the rule is: if nodes are meant to be oriented vertically, add constraint="false" and it will magically fix everything. I’m not proud that it took several hours of experimenting, digging through GitHub issues, and various documentation to figure this out. To be fair, that’s never explicitly stated anywhere as a handy tip, so you heard it here first.
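Diagrams hands layout off to Graphviz, where constraint is an ordinary edge attribute, so the rule is easiest to see in the DOT text itself. The sketch below is plain Python that only builds DOT source, so it needs neither Diagrams nor Graphviz installed. The node names and the build_dot helper are invented for this example and are not taken from my actual diagram.

```python
def build_dot(edges, rankdir="LR"):
    """Return Graphviz DOT source for a list of (src, dst, constrained) edges.

    Edges with constrained=False get constraint=false, which tells the
    layout engine not to use that edge when assigning ranks. In a
    left-to-right graph, that leaves the two nodes free to stack vertically.
    """
    lines = ["digraph homelab {", f"    rankdir={rankdir};"]
    for src, dst, constrained in edges:
        attr = "" if constrained else " [constraint=false]"
        lines.append(f'    "{src}" -> "{dst}"{attr};')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical nodes: a server feeding two devices that should sit
# one above the other rather than side by side.
edges = [
    ("R730", "boot mirror", True),
    ("R730", "storage pool", True),
    ("boot mirror", "storage pool", False),  # vertical link, no rank constraint
]

dot_source = build_dot(edges)
print(dot_source)
```

Feeding the printed text to Graphviz with and without the constraint=false attribute on the last edge is a quick way to see how much that one setting changes the layout.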

Future Steps

I still have a lot of work to do. Once I’ve finally swapped out the final bits of hardware and gotten them working, there’s still the job of actually installing TrueNAS SCALE, adding all of the apps to coordinate my cluster, migrating everything off of the R720, and anything else I can cram in there. Then I need to decide what to do with the R720 itself. It’s not quite old enough to be considered e-waste yet, but I wouldn’t be able to sell it for enough to even cover the cost of shipping the behemoth. Heck, I still have my R710 for similar reasons; there aren’t exactly many e-cyclers out here in the corn fields.

Anyone want a gently used R720 as a project machine?

Until Tomorrow