PG Phriday: Database Infrastructure

This PG Phriday is going to be a bit different. During my trip to Postgres Open this year, I attended a talk I had originally written off as “some Red Hat stuff.” But I saw the word “containers” in the title of the “PostgreSQL in Containers at Scale” talk and became intrigued. A few days later, I had something of an epiphany: I’ve been wrong about servers for years; we all have.

That’s a pretty bold claim, so it needs some background.

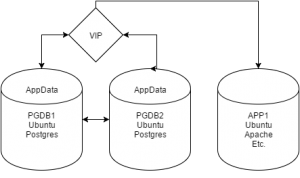

Fairly often in infrastructure and development, we see servers like this diagram:

But what’s the most important thing in the diagram? Can you even tell? Well, everything except the AppData component can be replaced. The primary goal of highly available design is building an architecture to ensure this is the case. I don’t care where the data lives, or what server is hosting it, so long as certain performance metrics are supplied by the underlying hardware. But from that diagram, the most important element would appear to be the servers themselves.

This kind of thinking is a trap, and one pretty much everyone I’ve met in the industry is guilty of falling into. In a two-server Postgres stack, where one server is the primary database and the other is a replica, we tend to gravitate toward preserving the original 1->2 orientation. This may be the case even if retaining this arrangement requires a second failover after an outage or some kind of maintenance. For some reason, the physical server gets the focus. How many times have you seen this? I can’t even count the number of times I’ve encountered servers named after their primary role as a database host, yet I have to physically log in to find out what the databases are. Sure, sometimes there’s a Wiki page of some kind outlining the data-to-host relationship, but is that really putting the data first?

That’s what I mean when I say we’ve all had it wrong. This obviously isn’t absolutely true, but the prevailing momentum still treats servers as important. They’re not. They’re a medium for whatever is running on them. Businesses with dozens or hundreds of servers and VMs already realize abstraction is necessary, but even here, the focus is misplaced. How many companies use Chef, Puppet, Ansible, Bcfg2, or some other tool to manage server configurations? Every one of these systems needs a master list of servers. Each server is given a semi-permanent profile.

Coordinating servers en masse with such tools is much easier, yet my AppData is still on Postgres1, and my Java app is still on JSRV1 through JSRV34. These are things we have to know, because deployment tools need them, and ad-hoc monitoring views need them, and we need to know why JSRV14 fell over and died. We have spare servers, so we create JSRV35. After months or years of this, the server names resemble an old blanket, full of holes and arbitrary assignments. I need to check on FooDB. That’s on Postgres1 and Postgres8, right? Why does the dev staff, DBA staff, or even the infrastructure team need to know any of that?

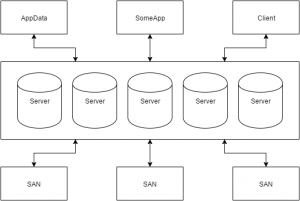

What I would love to see is something like this:

What server is the data being hosted on? It doesn’t matter. How do I get to the system for diagnostics or forensics? An alias or a dashboard. Can you at least tell me where the data is? A SAN. How do I get to the Postgres database? An alias. What alias? How about appdata-prod?
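To make the alias idea concrete, here is a minimal sketch of how a client might derive its connection settings from the role of the data rather than from a server name. The `alias_for` and `dsn_for` helpers are hypothetical names I made up for illustration, and the sketch assumes DNS (or a proxy) already points `appdata-prod` at whatever host currently serves the data. The output is an ordinary libpq keyword/value connection string.

```python
def alias_for(dataset: str, env: str) -> str:
    """Build the stable alias clients connect to, e.g. 'appdata-prod'.

    Assumption: the naming scheme is simply <dataset>-<environment>.
    """
    return f"{dataset.lower()}-{env.lower()}"


def dsn_for(dataset: str, env: str, port: int = 5432) -> str:
    """Return a libpq-style connection string that names only the alias.

    No physical host name appears anywhere; whichever server answers
    for the alias today is an infrastructure detail.
    """
    host = alias_for(dataset, env)
    return f"host={host} port={port} dbname={dataset.lower()}"


if __name__ == "__main__":
    print(dsn_for("AppData", "prod"))
```

Nothing in the application has to change when the data moves; only the target of the alias does.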

But why? What does this design provide that actually improves anything? That’s subjective to a degree, but some of the major benefits are centered around management.

- Is an assigned server misbehaving? Cycle it back into the pool and allocate a replacement.

- Is an application repeatedly having resource problems? Change the requirement list and reprovision.

- Are there persistent issues even after replacing servers? Investigate and modify the software or base container and test in iterations until a stable profile emerges. Then reprovision.

- Did the database fail over? Allocate a replacement replica and investigate the cause on the original primary. If it’s not hardware related, recycle it into the pool or deallocate the resources.

- How do I clone FooDB? Press the “Clone FooDB” button.
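The first couple of items on that list boil down to "hand the old one back, take a new one that meets the requirements." A rough sketch of that bookkeeping follows. The `Node` and `Pool` classes and the node names are all hypothetical; a real platform would track far more than CPU and RAM, and this is not how any particular product implements it.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str      # internal identifier; nobody should need to memorize it
    cpus: int
    ram_gb: int


@dataclass
class Pool:
    free: List[Node] = field(default_factory=list)
    assigned: Dict[str, Node] = field(default_factory=dict)  # role -> node

    def _take(self, min_cpus: int, min_ram_gb: int) -> Node:
        """Remove and return the first free node meeting the requirements."""
        for i, node in enumerate(self.free):
            if node.cpus >= min_cpus and node.ram_gb >= min_ram_gb:
                return self.free.pop(i)
        raise LookupError("no free node satisfies the requirement list")

    def allocate(self, role: str, min_cpus: int, min_ram_gb: int) -> Node:
        """Bind a role such as 'foodb-primary' to a suitable node."""
        node = self._take(min_cpus, min_ram_gb)
        self.assigned[role] = node
        return node

    def replace(self, role: str, min_cpus: int, min_ram_gb: int) -> Node:
        """Swap the node behind a role, cycling the old one back into the pool."""
        old = self.assigned[role]
        new = self._take(min_cpus, min_ram_gb)  # taken before old is returned
        self.assigned[role] = new
        self.free.append(old)
        return new


if __name__ == "__main__":
    pool = Pool(free=[Node("n1", 4, 16), Node("n2", 8, 32), Node("n3", 8, 32)])
    pool.allocate("foodb-primary", min_cpus=8, min_ram_gb=32)
    pool.replace("foodb-primary", min_cpus=8, min_ram_gb=32)
    print(pool.assigned["foodb-primary"].name)
```

The role is the only stable name here. Reprovisioning with a bigger requirement list is the same `replace` call with different numbers.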

By advocating a transient nature, we can focus on data and application roles, and not where they live. What do we want from our Postgres database? The data itself, necessary extensions, good performance, and the ACID guarantee. None of that requires us to know FooDB is on lp4cc1pgsql12 today and lp4cc3pgsql8 tomorrow. We should never have to type that, look it up, or even know it exists.

Yet it’s easy to say something should exist. How do we actually get there?

I’ve been watching things like Docker and LXC for a while, but without a management system, they’re effectively just low-overhead VMs. In some cases, they’re actually worse or even useless for databases due to how they handle disk storage. Things like Kubernetes persistent volumes help solve that issue, though there is inherent overhead caused by the fact the storage is considered external and must be accessed through network traffic. For larger servers, this is almost expected; how many systems handle storage with a SAN and several LUNs? Local storage is almost exclusively used for the operating system these days, especially in the era of VM penetration.

But again, that’s too low-level. Creating and managing containers isn’t really that different from doing so with VMs, except that it’s probably a different set of tools. Containers negate the requirement for configuration management, but now we need to coordinate container profiles and instances. Moving from bare hardware and VMs to containers just moves the problem. We need a hardware mapping system, a management console and tooling, and a bunch of other stuff to really make containers work at a higher level.

This is starting to dangerously resemble a corporate cloud infrastructure, because it is. While solutions like Heroku and AWS are great for external-facing applications and databases, quite a few companies build internal software that is never used by anyone outside of the organization. In those cases, all data is internal, the platform is local, and latency is necessarily low. If software needs to churn through vast amounts of data on a parallel stack, or the Postgres database is 30TB of distributed shards, that’s a lot of servers to manage.

I manage over 100 Postgres instances, and 80% of those are replicas that could be replaced by containers. I wrote a Django application to handle synchronization, DR failover needs, and basic provisioning, yet none of that code should have been necessary. In a container world, a clone is allocated when the container is instantiated. If it falls out of sync for some reason, it gets recycled into the pool and reallocated to fit the requirement of having thirty of them. A hyper-elastic system like this is much harder to kill, gives better uptime, and even addresses horizontal scaling concerns more consistently.
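The "recycle and reallocate until there are thirty" behavior is essentially a desired-state loop. Below is a small sketch of the decision step only. The `Replica` tuple, the `reconcile` function, and the 16MB lag threshold are all assumptions made for illustration; how lag is actually measured and how containers get created or destroyed is left to the platform.

```python
from typing import List, NamedTuple, Tuple


class Replica(NamedTuple):
    container_id: str
    lag_bytes: int  # replication lag behind the primary, however it is measured


def reconcile(
    replicas: List[Replica],
    desired: int,
    max_lag_bytes: int = 16 * 1024 * 1024,  # assumed threshold, tune to taste
) -> Tuple[List[Replica], List[Replica], int]:
    """Decide which replicas to keep, which to recycle, and how many to create.

    Returns (keep, recycle, missing): replicas that stay in service,
    replicas to hand back to the pool, and the count of fresh clones
    needed to reach the desired number.
    """
    healthy = [r for r in replicas if r.lag_bytes <= max_lag_bytes]
    stale = [r for r in replicas if r.lag_bytes > max_lag_bytes]

    keep = healthy[:desired]
    recycle = stale + healthy[desired:]  # surplus replicas are recycled too
    missing = desired - len(keep)
    return keep, recycle, missing


if __name__ == "__main__":
    current = [Replica("a", 0), Replica("b", 10**9), Replica("c", 100)]
    keep, recycle, missing = reconcile(current, desired=3)
    print([r.container_id for r in keep], [r.container_id for r in recycle], missing)
```

Run on a schedule, a step like this would cover what my Django code does by hand: the only input that matters is the number of replicas wanted.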

Given all of this, it should be no surprise that several management platforms have emerged to remove the suck-factor from handling containers. The Postgres Open talk was about Red Hat OpenShift, but there’s also Pivotal Cloud Foundry and Apache Stratos. Why just these? The distinction here is that all of them are considered Platform as a Service (PaaS) instead of Software (SaaS) or Infrastructure (IaaS). Most existing corporate cloud systems are IaaS, making it easy to quickly deploy a lot of VMs. By adding the platform layer, devs and DBAs alike can spin up the complete stack an application needs, with any number of software or hardware prerequisites.

If a trading app needs Django on top of nginx serving from a local clone of the current market symbol pricing database, that’s an easy deployment. Even if there need to be 50 of them, the Postgres database cloning and replication process would be the same. Nobody has to build that stack. Nobody has to modify the configuration management system to install system packages and add the new server to the deployment tool, then cut and paste from a Wiki to install the actual app and launch everything in the proper order. The fact I even wrote that sentence says a lot about how servers are handled in the current infrastructure climate at many organizations.

The role of Postgres in this is simple: be Postgres. As just another part of the stack, with built-in replication capabilities and a transient nature tied to the PGDATA directory, it already matches this model. Our Postgres data needs a place to live, and a place to execute. SAN hardware tends to provide a lot of extended functionality like deduplication, snapshots, and CoW, all of which greatly simplify data cloning and deallocation. Containers tied to such data clones are equally disposable, and make that data available to applications and end users without much regard to the underlying hardware.

I want to care about Postgres and my data, not how I get to it. From now on, my primary goal at work will be to make this a reality. Even if that doesn’t mean a PaaS deployment, virtual host names and tools like pgpool can do some of the heavy lifting to hide physical servers. We all do too much work to have to remember a bunch of arbitrary server names, so why do it at all?