PG Phriday: MySQL Mingle

Through the wonderful magic of corporate agreements, I’ve been pulled back into (hopefully temporarily) managing a small army of MySQL servers. No! Why can’t this just be a terrible nightmare?! Does anyone deserve such debasement?

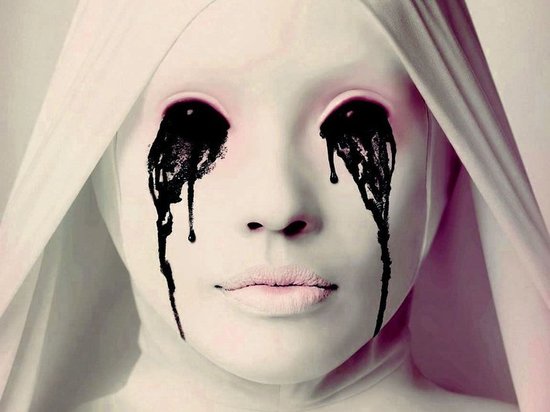

Side effects of using MySQL may include…

Hyperbole? Maybe a little. If MySQL was really that terrible, it wouldn’t be in such widespread use. However, as a Postgres DBA for so many years, I’ve come to appreciate what really sets it apart from engines and development approaches like those showcased in MySQL.

Let’s explore a few of these.

Privileges

MySQL user management is an unholy abomination. As ridiculous as it sounds, this is no exaggeration.

This is how to create a superuser in Postgres:

CREATE USER some_bozo WITH PASSWORD 'cowsrule' SUPERUSER;

And here’s the same thing in MySQL:

CREATE USER 'some_bozo'@'%' IDENTIFIED BY 'cowsrule';

GRANT ALL ON *.* TO 'some_bozo'@'%';

That’s not so bad, right? Syntactically no, but the implication of the @ symbol can’t be ignored. Consider these two users:

CREATE USER 'just_a_girl'@'127.0.0.1' IDENTIFIED BY 'justwhy';

CREATE USER 'just_a_girl'@'192.168.56.10' IDENTIFIED BY 'you suck';

Yes, that’s two distinct users. It just so happens that the second IP address is for the local VM running MySQL itself. Depending on the host specified in the connection string, we would need to supply a different password to authenticate. In older MySQL installations, it’s not uncommon to accumulate dozens of entries for the same user. Occasionally one or more of these users will have a different password from the rest.

What about roles? Well, MySQL doesn’t have them. Postgres DBAs generally recommend managing grants through role assignment. Users can be transient, so granting direct access to database objects isn’t very transferable. Imagine we have two tables out of one hundred, meant especially for reporting. We could do this in Postgres:

CREATE ROLE bi_role;

CREATE USER angie WITH PASSWORD 'sillygoose';

CREATE USER fred WITH PASSWORD 'dinotopia';

GRANT SELECT ON tab_a TO bi_role;

GRANT SELECT ON tab_b TO bi_role;

GRANT bi_role TO angie;

GRANT bi_role TO fred;

The bi_role role can be granted to anyone who takes on that job title, be it a single person, or a whole team. They all have access to the same objects, and we don’t have to micro-manage the grants to each individual user. Here’s the same thing in MySQL:

CREATE USER angie@'%' IDENTIFIED BY 'sillygoose';

CREATE USER fred@'%' IDENTIFIED BY 'dinotopia';

GRANT SELECT ON test.tab_a TO angie@'%';

GRANT SELECT ON test.tab_b TO angie@'%';

GRANT SELECT ON test.tab_a TO fred@'%';

GRANT SELECT ON test.tab_b TO fred@'%';

Not so bad with two tables, but this compounds geometrically as the count of tables or team-members increases. As a consequence, it’s often easier to simply grant access to everything in a database, regardless of how appropriate that may be.

Beta Bumps

Quick! Can anyone figure out when the GTID feature was added to MySQL? It’s a huge improvement and makes things like multi-master possible, as well as enabling an entire tool chain that imparts functionality like automated failovers. It’s 5.6, right?

Wrong. It’s 5.6.5.

Let that sink in. Really let it simmer. I was outright incredulous when I realized what happened here. A critical feature introduced in a dot release, something normally reserved for bug fixes and security vulnerabilities. There’s a good reason most software projects do it that way, and the question that introduced this section is the first clue.

According to the MySQL documents, 5.6.5 is a Milestone release, and this is what they say about Milestones:

This is a milestone release, for use at your own risk. Significant development changes take place in milestone releases and you may encounter compatibility issues, such as data format changes that require attention in addition to the usual procedure of running mysql_upgrade. For example, you may find it necessary to dump your data with mysqldump before the upgrade and reload it afterward.

What they don’t say is that Milestones are part of their alpha, beta, and release candidate process. The first “real” version of 5.6 was really 5.6.10. Surprise! Hopefully nobody has a legacy system running 5.6.8 somewhere, thinking it was a general release.

What version is the first version of Postgres 9.6? 9.6.0. That’s important. There’s no ambiguity in that release designation.

Am I overreacting? Probably. I’m still in a bit of shock, and first reactions aren’t always sane.

Replication

This is one place MySQL may still maintain a slight advantage over Postgres. Postgres unequivocally has more accurate and stable replication with its binary transaction log stream. A replica really is a replica in every respect, right down to which page data is written to. Except… well, there’s a question of utility.

MySQL replication is more like database-wide logical replication. It’s as if pglogical were an integral part of Postgres. Don’t want to replicate a specific database in the instance? Exclude it. Don’t need certain tables? Ignore them. This is one reason MySQL is so popular for sharding. Using this kind of partial replication, shards can be distributed across a whole fabric of MySQL servers, with designated alternate locations.

Combine that with replication chaining, and you get a circular architecture of failover candidate shard masters. It’s multi-master without an explicit need for a global transaction ID. Of course by adding such functionality in 5.6, these types of replica arrangements become more robust.

Can Postgres do anything like that? Kinda. The citus extension is probably the closest approximation regarding distribution model. Tables can be selectively broadcast to various candidate servers, and shards can have a configurable amount of spare copies spread through the cluster. But all queries have to go through the central master, as it retains the full mapping of active shard locations. It’s a way to distribute data and parallelize queries, not to multi-master.

There’s the BDR extension, but it’s a replication strategy instead of a distribution technique. Every master must contain all data in the cluster. Short of using UNLOGGED tables, there’s no exclusion process. Yes it’s multi-master, but instead of spreading 5TB across 10 nodes, we end up with 10 5TB nodes. Yuck. BDR is also incompatible with foreign data wrappers, a hallmark feature of Postgres since 9.3. In fact, there’s a whole boatload of restrictions that drastically reduce Postgres’ capabilities.

This also ignores DDL. Not a single Postgres logical replication system will properly replicate a ‘CREATE TABLE’ statement, for example. Considering these statements are in the transaction log, as they must be in order to appear on the primary system, I’m not entirely sure why this is the case. Regardless, there’s no direct equivalent to MySQL’s replication system and its relative flexibility at this time. Even though MySQL’s replication is basically just a logical replay mechanism, being tightly interwoven into the core makes a massive difference in potential.

Sunny Side

Is there any way Postgres can save me from this torment? Is there anything I wish Postgres had? Oddly enough, the answers are no and yes respectively. This is something of a surprise, as I was fully expecting to hate every aspect of MySQL after giving it up back in the 3.23 days. It still has a lot of the old gotchas, but others have naturally evolved with the platform.

User management on MySQL systems is an utter nightmare I wouldn’t wish on my worst enemy. Due to MySQL’s replication system being logical, many tools don’t work without the GTID feature. In some cases, this may mean across-the-board upgrades before reliable high availability is even an option. On extremely old platforms, it’s generally better for existing database instances to continue as MySQL systems. There’s a reason why WordPress is still officially MySQL only. So I’m stuck with these servers for the duration.

On the other hand, MySQL replication is something I wish Postgres had an equivalent for. Not because the Postgres binary approach is bad or incomplete, but because of the flexibility a fully logical approach bestows. It would be a chore to deploy and probably maintain, but it’s entirely possible to create a five node cluster and distribute ten shards across them at two shards each. Add in replication and one shard from each node could exist on two additional nodes. That’s an impressive level of redundancy. And each shard is fully read/write on their active tables, while all the replicas are read capable.

It would require a responsible access layer to decode active shard locations depending on the current mapping, but those already exist. This is definitely an area where Postgres could grow. A Postgres extension could doubtlessly make it happen if there was enough demand and backing. Or maybe there’s already something in the works that hasn’t yet been released.

Either way, I plan on getting these new MySQL servers whipped into shape so they can be left to their own devices with little further intervention. I’m still a proud Postgres DBA, after all.